I have been a big fan of UIM/P for a long time. I have blogged extensively on the subject and was pleased to receive an invitation to take part in beta testing of the new version (codename Nimbus). I would like to thank the EMC UIM/P team for the privilege, and I look forward to cooperating with them in the future.

OK, let’s get started!

The following new features will be available in UIM/P Nimbus:

- UIM/P to manage remote Vblock;

- FAST VP support for VMAX, VNX;

- Support for Shared Storage;

- VPLEX support;

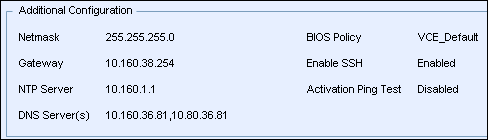

- Configure NTP and DNS Server(s).

Deployment/Upgrade

Deployment

UIM/P deployment is almost identical to that of previous versions. See “Install and configure UIM/P”.

There are two new options that were not available before:

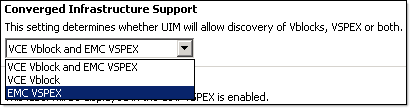

- UIM/P can now discover VCE Vblock, EMC VSPEX, or both:

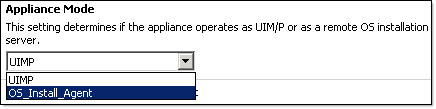

- UIM/P has two operation modes: UIMP and OS_Install_Agent.

UIMP mode is the mode we are used to. In OS_Install_Agent mode, the UIM/P virtual appliance acts as an OS installation agent at a remote datacenter. This mode has a limited number of services enabled and does not have a web interface. If you try to connect to it, you will get a “UIM/P Unavailable, Error 503” error message. Don’t be alarmed, this is expected behaviour.

If you are wondering whether it is possible to swap between the modes: yes, it is. If network connectivity to the remote datacenter is no longer available, or if for any other reason you would like to manage the Vblock locally, you can change the UIM/P mode and start using it. You would have to start from scratch, as the UIM/P database will be empty. Use the UIM/P Service Adoption Utility (SAU) to adopt the Vblock and import the services. UIM/P needs to be powered off to change the mode.

You install UIM/P in UIMP mode in one datacenter and UIM/P in OS_Install_Agent mode in all remote datacenters.

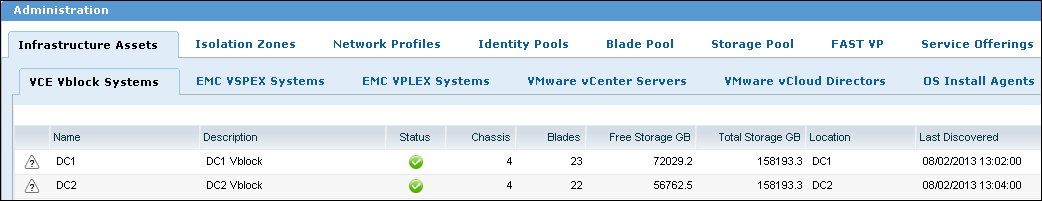

UIM/P Nimbus Administration tab. New interface. Multiple Vblock Management.

This is how remote Vblock management works:

- Deploy UIM/P in UIMP mode in the main datacenter;

- Deploy UIM/P in OS_Install_Agent mode in all remote datacenters and perform the following tasks;

- Upload and import OS installation ISO image;

- Change

homebase.adminpassword (this password will be used in step 3);

RemoteUIM001:~ # cd /opt/ionix-uim/tools

RemoteUIM001:/opt/ionix-uim/tools # perl password-change.pl

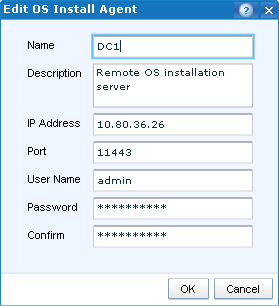

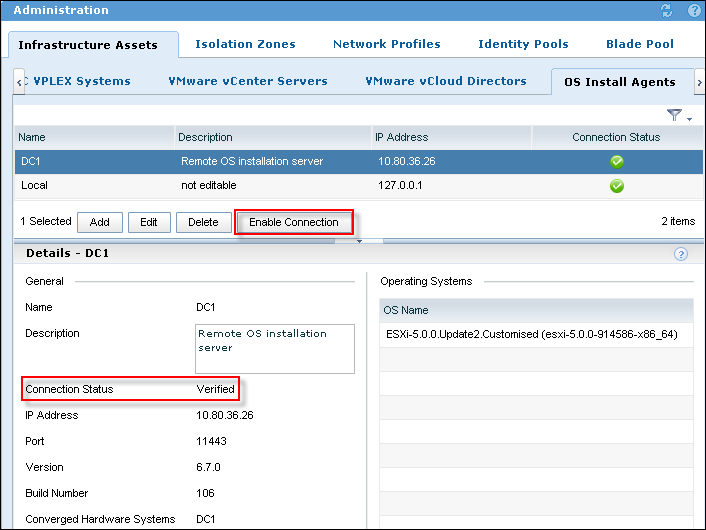

- Login to the UIM/P, navigate to Administration –> Infrastructure Assets –> OS Install Agents and click Add;

- Fill in remote OS Agent details (admin password is the homebase.admin password that you changed in step 2.b) and click OK;

- Click Enable Connection and verify Connection Status.

This configuration allows one UIM/P VA to manage multiple remote Vblocks.
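To make the layout rule concrete, here is a minimal planning sketch in Python. It is not part of UIM/P and does not talk to it; the `Appliance` record, the `check_topology` helper and the datacenter names are all my own hypothetical names. It only checks the rule described above: exactly one appliance in UIMP mode, and an OS_Install_Agent appliance in every other datacenter.

```python
from dataclasses import dataclass

# Mode names as used in this post for the two UIM/P operation modes.
UIMP = "UIMP"
OS_INSTALL_AGENT = "OS_Install_Agent"


@dataclass(frozen=True)
class Appliance:
    """One planned UIM/P virtual appliance (hypothetical planning record)."""
    name: str
    datacenter: str
    mode: str


def check_topology(appliances, datacenters):
    """Return a list of problems found in a planned multi-Vblock layout."""
    managers = [a for a in appliances if a.mode == UIMP]
    if len(managers) != 1:
        return [f"expected exactly one {UIMP} appliance, found {len(managers)}"]

    main_dc = managers[0].datacenter
    agent_dcs = {a.datacenter for a in appliances if a.mode == OS_INSTALL_AGENT}

    # Every datacenter other than the main one needs an OS install agent.
    return [
        f"{dc}: no {OS_INSTALL_AGENT} appliance"
        for dc in datacenters
        if dc != main_dc and dc not in agent_dcs
    ]
```

Calling `check_topology(layout, ["London", "Leeds"])` on a plan with one UIMP appliance in London and one agent in Leeds returns an empty list; adding a third datacenter without an agent returns one problem line for it.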

Upgrade

In-place upgrade to UIM/P Nimbus is supported from UIM/P 3.1.x or higher. The procedure is the same as described in this blog post: “HOW TO: Upgrade EMC Ionix Unified Infrastructure Manager/Provisioning (UIM/P)”. Please be patient: in my environment it took 45-50 minutes to upgrade UIM/P 3.2.0.1 Build 661.

FAST VP Support

- FAST Policies on arrays discovered by UIM/P

- Apply FAST policies to block storage (instead of storage Grade)

- Configuration Pre-requisites

  - Vblock 300 @RCM 2.5+

    - FAST VP Enabled

    - Mixed drive types in pools

  - Vblock 700 @RCM 2.5+

    - FAST VP Enabled

    - FAST VP tiers created and associated to Thin Pools

    - FAST VP policies created and associated to existing VP tiers

  - Optional VPLEX Metro (for above configuration)

    - Matching FAST Policy names & initial tiers across arrays

    - Matching pool names for VNX FAST Policies across arrays

    - Vblock 300 @RCM 2.5+

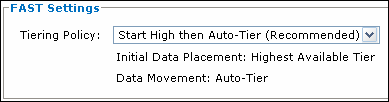

In previous versions of UIM/P you did not have a choice – all LUNs were configured as thin LUNs (also see my post “EMC UIM/P and Thick LUNs”) with the following FAST VP tiering policy:

It will probably work in most environments but may put an unnecessary load on the array. The Initial Data Placement is a killer. Let’s say you added a couple of new LUNs, configured the datastores and started to build or copy VMs. What is going to happen? New or copied VMs will be placed on the highest available storage tier within the pool. This is not necessarily what you want. Well, in UIM/P Nimbus you can finally configure the storage tier from which the LUN will be allocated.

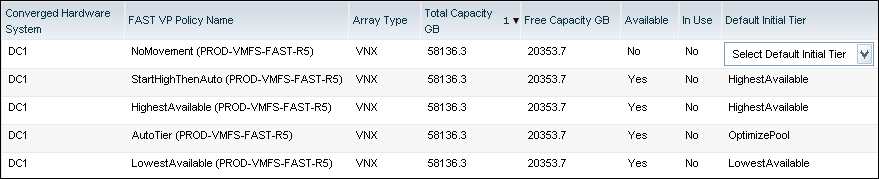

Here is an example: I have a storage pool with all three tiers: Extreme Performance, Performance and Capacity. UIM/P automatically configures the following FAST VP policies: Start High then Auto-Tier, Auto-Tier, Highest Available Tier, Lowest Available Tier and No Data Movement. The Default Initial Tier for the NoMovement policy needs to be configured for the policy to be available.

Instead of provisioning the LUNs and then changing the tiering policy, you can configure them in UIM/P:

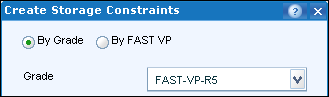

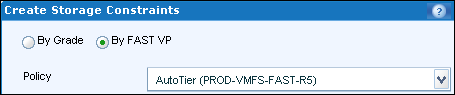

When you configure Service Offering constraints, you have a choice to allocate storage either by Grade or by FAST VP policy:

The Service FAST1 has the following storage provisioned:

FAST1_SHTA-HA – Datastore created from Start High Then Auto-tier FAST policy – On the storage array the Default initial tier is Highest Available.

EMC VSI screenshot confirms the datastore/storage tier placement:

Shared Storage Service

In previous versions of UIM/P we could not share storage between two or more service offerings / clusters unless we manually configured LUN(s) on the storage array and presented them to multiple clusters / storage groups. UIM/P Nimbus introduces a new type of Service – Shared Storage Service. This type of service allows you to create and share storage between multiple Standard Services (BTW, from now on the old type of Service that we used to create will be referred to as “Standard Service”).

N.B. Shared storage is Block Only.

The use cases for Shared Storage Services:

- Datastore to share Templates / ISO images;

- Shared Diagnostic partition (more info on this is in the post “Configure Shared Diagnostic Partition”);

- SRM placeholder datastore;

- Shared datastore that can be used to facilitate Virtual Machine migration between clusters;

- etc… (let me know if you use it for anything else).

Configure Shared Storage Service:

- UIM/P –> Administration –> Service Offerings.

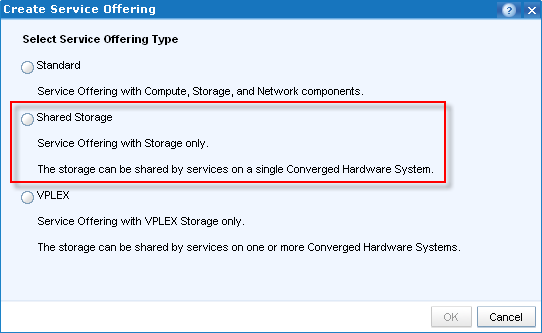

As you can see from the screenshot below, UIM/P Nimbus can create three types of Service Offerings: Standard (the old type of Service), Shared Storage and VPLEX:

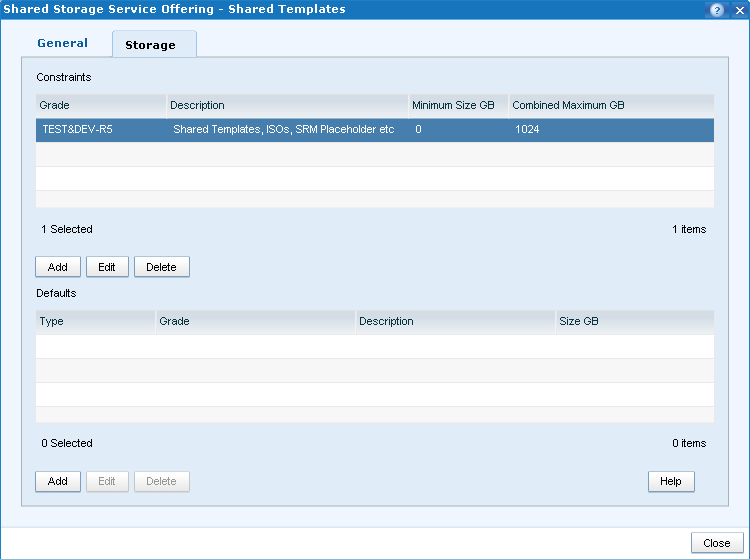

- Apart from the service name, Storage is the only resource which can be configured in Shared Storage Service offering:

Add storage from a Grade (FAST VP policies do not come into play in SSS) and configure min and max;

There is no need to configure Storage Defaults: when you create a Service from a Shared Storage Service offering, you create LUNs/datastores per customer requirements. All Shared Storage Services will be carved out of the Storage Grades that you assigned to the offering and will stay within the configured min and max limits.

- Make the Service Offering available;

- Create a new Service from the SSS;

- Configure the Shared Storage Service name and select the Vblock:

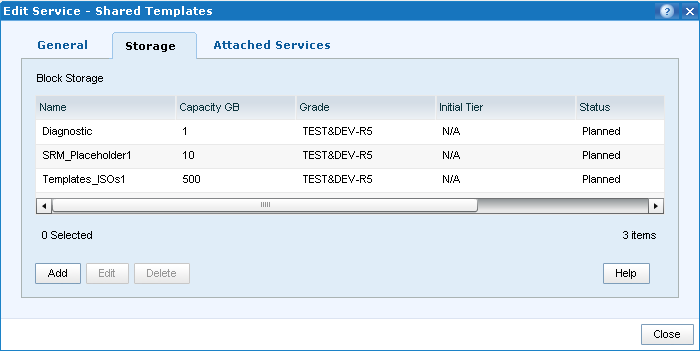

- Configure storage that will be presented to the Standard services:

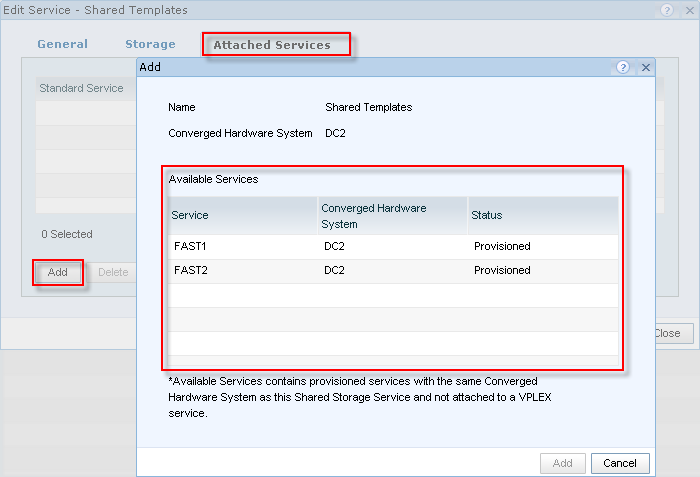

- Attach the Standard Services to the SSS:

Standard Services must be: Configured on the same Vblock, Provisioned, Activated and synchronised with vCenter!

In this example, there are two Standard Services that I configured during FAST VP testing: FAST1 and FAST2. Highlight the services and click Add.

Click Close;

- Click the Provision button. This will create the Shared Storage LUN(s) and add them to the Standard Service Storage Groups on the array. Shared Storage LUN(s) will be configured with the same Host LUN ID across all Storage Groups. During SSS provisioning, the Standard Services that are attached to it will be in Workflow in Progress mode to prevent any other change to the Standard Services.

- There is no option to Synchronise the Shared Storage Service; you need to synchronise the Standard Services attached to it individually. When you synchronise one of the Standard Services, the shared datastores become available on the hosts of the other Standard Services as well, but the vSync Status of those other services will stay Partially Synchronized. If you think about it, it makes sense – what if there are some outstanding changes that require vSync, and the moment we sync the SSS might not be ideal to apply them?
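Two of the rules above are easy to express as checks: a Standard Service can only be attached if it is on the same Vblock, provisioned, activated and synchronised, and a shared LUN ends up with the same Host LUN ID in every Storage Group. The Python sketch below is only an illustration of those two rules. The `StandardService` record, both function names and the sample data layout are hypothetical; none of this is a UIM/P API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StandardService:
    """Hypothetical snapshot of a Standard Service's state."""
    name: str
    vblock: str
    provisioned: bool
    activated: bool
    synchronised: bool


def can_attach(service, sss_vblock):
    """True if the service meets the attach preconditions described above."""
    return (
        service.vblock == sss_vblock
        and service.provisioned
        and service.activated
        and service.synchronised
    )


def host_lun_ids_consistent(storage_groups):
    """True if no LUN appears with two different Host LUN IDs.

    storage_groups maps a storage group name to {lun_name: host_lun_id}.
    """
    seen = {}
    for mapping in storage_groups.values():
        for lun, host_lun_id in mapping.items():
            # setdefault records the first ID seen for this LUN and
            # returns it on later lookups, so a mismatch shows up here.
            if seen.setdefault(lun, host_lun_id) != host_lun_id:
                return False
    return True
```

With the two services from my example, `can_attach` would accept FAST1 and FAST2 only once both are provisioned, activated and synchronised on the Vblock selected for the SSS. The second helper is the kind of check you could run against an export of the Storage Groups after provisioning.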

VPLEX Support

New “VPLEX Service” allows management of Virtual Volumes shared across stretch clusters represented by two Standard Services, one in each site (i.e. Vblock).

UIM/P Nimbus will support VPLEX in LOCAL and METRO configurations.

VPLEX systems can be added and discovered in Administration –> Infrastructure Assets –> EMC VPLEX systems.

VPLEX Configuration Pre-requisites:

- Requires Standard Services to be available;

- For each host, configure FE paths to Directors A and B of separate engines in case of multiple engines;

- For each host, configure FE paths to Directors A and B in case of a single engine;

- Each host should have at least one path to an A director and one path to a B director on each fabric, for a total of 4 logical paths.
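The four-path rule in the last prerequisite can be written down as a small check. This is a rough Python sketch under my own assumptions: the fabric names `fabric-a` and `fabric-b` and the `missing_paths` helper are hypothetical, and a path is reduced to a (fabric, director side) pair. It does not cover the separate-engines rule for multi-engine systems.

```python
def missing_paths(paths, fabrics=("fabric-a", "fabric-b")):
    """Return the (fabric, director side) pairs a host has no path to.

    paths is an iterable of (fabric, side) tuples, where side is "A" or "B".
    An empty result means the host has at least one path to an A director
    and one to a B director on each fabric, i.e. the 4 logical paths.
    """
    present = set(paths)
    return [
        (fabric, side)
        for fabric in fabrics
        for side in ("A", "B")
        if (fabric, side) not in present
    ]
```

For a host with paths to both director sides on both fabrics the function returns an empty list; anything it does return is a path that still needs zoning.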

VPLEX Metro

Vblock 300/700 @RCM 2.5+

- VPLEX 5.0 or later

- 2 VPLEX clusters, one on each site (i.e.: Vblock) with at least one engine on each site

- 2 WAN Nexus switches with WAN connectivity and zoning completed

- Back-end VPLEX-to-Array connectivity with back-end zoning completed

- Front-end connectivity completed for UCS-to-VPLEX

- Standard director connections (i.e.: not cross-connected)

- Access to a single vCenter instance to manage both Vblocks

- Remote OS Install Agent has been configured

- Requires UIM/P Nimbus on remote site to be in ‘OS Install Agent’ mode

- Single UIM/P instance to access both Vblocks

VPLEX Local

Vblock 300/700 @RCM 2.5+

- VPLEX 5.0 or later

- 1 VPLEX cluster attached to 2 Vblocks in one site

- Back-end VPLEX-to-Array connectivity with back-end zoning completed

- Front-end connectivity completed for UCS-to-VPLEX

- Access to a single vCenter instance to manage both Vblocks

- Single UIM/P instance to access both Vblocks

Configure NTP and DNS Server(s)

UIM/P Nimbus gives you a new option to configure NTP and DNS servers.

Conclusion

EMC Unified Infrastructure Manager for Provisioning has evolved into a very solid and useful product. It is nice to see that it is still in constant development, with bugs fixed and additional functionality added in every version.

Well done, EMC UIM/P Team!

What other features would you like to see in new versions? Comments and ideas are welcome. Leave a comment below and I will pass it on to the development team.

UPDATE:

EMC Unified Infrastructure Manager / Provisioning (UIM/P) 4.0 is GA as of 16/08/2013.
